Expanded the "Industry and personal view" section and added further references.

What is a Code Review?

Although there are different kinds of review with different goals, in this article "Code Review" means a review of a code, configuration or DB schema change that we ask a teammate to carry out before the change is merged into the shared code repository. The goal of these reviews is to control quality and to try to keep bugs out of the shared repository and, therefore, out of production.

So the code reviews we are talking about:

- Try to prevent errors/bugs by acting as a safeguard.

- Happen within the flow that takes any change to production.

There are other kinds of code review that are not part of the normal development flow and are usually used for shared learning within the team. We will not cover them in this article.

The most common case: asynchronous code reviews

In this context, a very common (and, for some, recommended) way of doing code reviews is for a developer (A) to work alone on a task (d) and, once the change is finished, open a Pull Request / Merge Request that another team member must review asynchronously.

The following diagram shows an example of this way of working. Developer A opens PRs/MRs that developer B reviews, producing change requests that developer A must then incorporate.

In the example, the work consists of increments d1, d2 and d3, and it is deployed to production once the code review of increment d3 is finished.

A's development flow is constantly interrupted to wait for the feedback from each code review. As a result, the total time until we release to production and get real feedback is very long.

With this way of working, there is probably an unconscious tendency to work in larger increments so as to avoid waiting for code reviews. In fact, this tendency toward larger steps is what I have found in many of the teams I have worked with. It is a curious situation: on the one hand we stress the need to work in small steps, and on the other we use practices that tend to discourage them.

So the most common way of working looks more like the following diagram:

Moreover, because the PRs/MRs are now large, the code review loses its purpose and often turns into a pantomime (LGTM). (See: how we write/review code in big tech companies)

This last way of working improves the lead time to production somewhat, but at the cost of taking larger steps and losing the benefits of serious code reviews.

With asynchronous code reviews, reality is rarely as simple as the diagrams suggest. It usually involves many simultaneous, intersecting flows, with a strong tendency toward individual work and constant context switching.

In fact, nothing encourages the team to focus on a single user story at a time, since everyone is busy with their own tasks while doing asynchronous code reviews for the others.

Another frequent problem is that code reviews are not given enough priority and queues build up. This increases lead time and results in larger changes being deployed to production, with the added risk that entails.

Aren't there other options?

Fortunately there are: synchronous code reviews and continuous code reviews.

Synchronous, prioritized code reviews

A first step can be to give code reviews top priority and to do them immediately and synchronously, between the person who developed the increment and the person doing the review.

This way of working reduces the total lead time of each increment and encourages working in small steps.
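To make the lead-time argument concrete, here is a minimal back-of-the-envelope model in Python. It assumes, as in the diagrams, that A does not start the next increment until the previous review is finished, and that every review waits a fixed time in the queue before someone picks it up. The `Increment` class, the `lead_time` function and all the hour figures are hypothetical, chosen only for illustration; they are not measurements.

```python
from dataclasses import dataclass


@dataclass
class Increment:
    dev_hours: float     # hypothetical: time A spends coding the increment
    review_hours: float  # hypothetical: time spent actually reviewing it


def lead_time(increments, wait_hours):
    """Hours from starting the first increment until the last one is approved.

    Assumes strictly sequential work: each increment is developed, waits
    `wait_hours` until a reviewer picks it up, and is then reviewed before
    the next increment starts.
    """
    total = 0.0
    for inc in increments:
        total += inc.dev_hours + wait_hours + inc.review_hours
    return total


# Made-up durations for increments d1, d2 and d3
work = [Increment(2, 0.5), Increment(3, 0.5), Increment(2, 0.5)]

async_lt = lead_time(work, wait_hours=4)  # review picked up hours later
sync_lt = lead_time(work, wait_hours=0)   # review done right away, together
print(f"async: {async_lt} h, sync: {sync_lt} h")  # prints: async: 20.5 h, sync: 8.5 h
```

With these made-up numbers, waiting accounts for 12 of the 20.5 hours in the asynchronous case. Removing that wait, which is what prioritized synchronous reviews aim for, leaves 8.5 hours for the same three increments, and the gap grows with every extra increment, which is also why asynchronous queues push people toward fewer, larger PRs/MRs.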

As a side effect, code reviews should also be easier, since the developer walks the reviewer through the change and can explain in detail why each decision was made.

This way of working requires a lot of coordination among team members, and it is not easy to keep reviews synchronous all the time, but it is certainly an easy step to take if we are already doing asynchronous code reviews.

It really is as simple as giving PRs/MRs top priority and, as soon as you have one ready, arranging with someone to review it together at a single computer.

Pairing/Ensemble programming: continuous code review

Pair programming: "All code sent to production is created by two people working together at a single computer. Pair programming increases software quality without affecting delivery time. It is counterintuitive, but two people working at a single computer will add as much functionality as two working separately, except that it will be of much higher quality. The increase in quality brings big savings later in the project." http://www.extremeprogramming.org/rules/pair.html

When we use pair programming as recommended in Extreme Programming, the code is designed and developed jointly by two people. This produces a constant review of the code, so no final review phase is needed.

From a coordination standpoint it is certainly the simplest system: once the pairs are formed, each pair organizes itself to deliver increments, which are implicitly reviewed continuously as they are written.

In this case, the diagram representing this way of working would be:

Building on pair programming and its benefits, a newer way of working has emerged, known as mob/ensemble programming. Here the whole team works at the same time on a single change, using a single computer. The entire team collaborates on the design of each piece of code, keeping context switches to a minimum and minimizing the lead time of each change. As with pair programming, ensemble programming provides a continuous, synchronous review of the code.

Of the ways of working we have seen, pair or ensemble programming has the shortest lead time per change and the fewest context switches and interruptions, and it requires the least coordination.

Even so, pair and ensemble programming are not easy practices; they take effort and a lot of practice. What I am sure of is that they take the quality benefits usually associated with code reviews to the extreme.

Industry and personal view

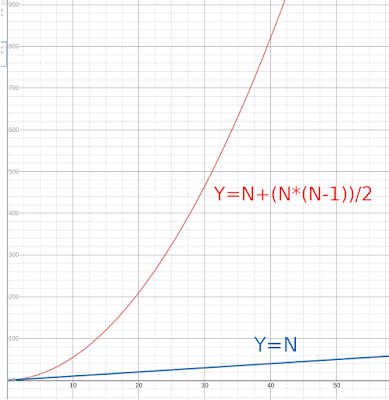

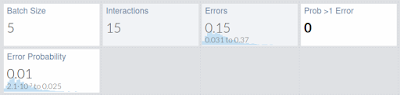

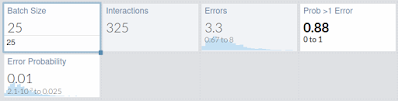

If there is one thing the industry seems to agree on, it is that code reviews are a good practice that every development team should follow. Most sources also recommend working in small increments, so that the complexity and risk of each change can be better controlled.

Given these two points, it seems a good idea to use code reviews and to make them as synchronous and continuous as possible, so that we can work in those small, low-complexity, low-risk increments.

For my part, I have spent quite a few years pushing for pair or ensemble programming, creating a flow of small increments that are reviewed continuously.

At both Alea Soluciones and TheMotion, we used pair programming by default and ensemble programming occasionally. Both companies deployed daily, and we could make considerable changes in small, safe steps (3s). Besides this steady, sustainable development flow, we gained attractive extra benefits, such as spreading domain and technical knowledge easily and onboarding new members quickly. At Alea Soluciones we hired people who already had experience with pair programming and XP practices, which made things easier. At TheMotion, however, we had to introduce XP practices (TDD, pair programming, etc.), so we brought in technical coaching help.

At Nextail there was a mix of teams: some did pair programming with continuous review, while others used asynchronous code reviews but prioritized them to avoid queues.

At Clarity AI, some teams use asynchronous code reviews but prioritize them to minimize lead time. Two teams already do continuous code reviews (using pair or ensemble programming), and we are gradually extending this way of working to other teams.

Of course, my experience has no statistical value, and it is far more interesting to look at the results of more rigorous studies such as DORA (https://www.devops-research.com/research.html). They associate the following practices with high-performing teams:

Related: